blether -

verb: talk long-windedly without making very much sense. noun: long-winded talk with no real substance.

Friday, June 11, 2021

Creating a Self Signed Certificate with RootCA using openssl

Monday, December 21, 2020

Using Tanzu Service Manager to expose Tanzu Postgres services to Cloud Foundry

A few products under the Tanzu brand have recently been made generally available, among them Tanzu Postgres for Kubernetes and the Tanzu Service Manager. Together they are a very useful combination which can be used to make the Postgres database available to applications running in a Cloud Foundry (or Tanzu Application Service - TAS) environment.

So what are these products and how do they operate? Firstly, Tanzu Postgres is simply a deployment of the OSS Postgres database with the support of VMware. Under the guise of Pivotal there has been a commercial offering for Postgres on virtual machines for many years, and in recent times engineering effort has been expended to containerise this wonderful database so that it can run on the Kubernetes orchestration engine.

The distribution has been split into a couple of components, the first being an operator which manages the lifecycle of a Postgres instance. This makes the creation of an instance as simple as applying a YAML configuration file to Kubernetes.

Tanzu Service Manager (TSMGR) is a product which runs on Kubernetes and provides an OSBAPI (Open Service Broker API) interface that is integrated with the Cloud Controller of Cloud Foundry. It manages helm chart based services that run on Kubernetes and makes them available to applications and application developers in Cloud Foundry such that the service looks like it has a fully native integration.
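To give a feel for what "an OSBAPI interface" means in practice, here is a minimal sketch of the catalog request that a platform such as the Cloud Controller makes to any Open Service Broker. The /v2/catalog path and the X-Broker-API-Version header come from the OSBAPI specification; the broker URL, the credentials and the version value are hypothetical placeholders, and the request is only built, not sent.

```python
import base64
import urllib.request


def build_catalog_request(broker_url: str, username: str, password: str,
                          api_version: str = "2.14") -> urllib.request.Request:
    """Build (but do not send) an OSBAPI catalog request using basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"{broker_url.rstrip('/')}/v2/catalog", method="GET"
    )
    # Every OSBAPI request must state which version of the API it speaks.
    request.add_header("X-Broker-API-Version", api_version)
    request.add_header("Authorization", f"Basic {token}")
    return request


# Hypothetical broker address and credentials, for illustration only.
request = build_catalog_request("https://tsmgr.example.com", "admin", "secret")
print(request.get_method(), request.full_url)
```

The response to this call is the list of services and plans that then shows up in the Cloud Foundry marketplace.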

So if we put these two together we have a fully supported version of Postgres running on Kubernetes that can be made available to applications running in the Tanzu Application Service.

For an example installation of Tanzu Postgres with the Service Manager, have a look at my GitHub repo.

Wednesday, September 9, 2020

Deploying Tanzu Application Service to Kubernetes (TKG)

Introduction

Installation

Kubernetes

Tanzu Application Service

Thursday, August 27, 2020

First Experiences with Tanzu Kubernetes Grid on AWS

Introduction

- TKG - Tanzu Kubernetes Grid (Formerly known as the Heptio distribution)

- TKGI - Tanzu Kubernetes Grid Integrated (Formerly known as Pivotal Container Service - PKS)

Installation

- Download and install the tkg and clusterawsadm command lines from the VMware Tanzu downloads.

- Set up the environment variables needed to run clusterawsadm, which will bootstrap the AWS account with the necessary groups, profiles, roles and users. (This only needs to be done once per AWS account.)

$ clusterawsadm alpha bootstrap create-stack

- The configuration for the first management cluster can be done either via a UI or via a configuration file. The UI is a good choice the first time you do this as it guides you through the setup. It is available through a browser once you have run tkg init --ui and then accessed localhost:8080/#/ui.

$ tkg init --ui

Or you can set up the ~/.tkg/config.yaml file with the AWS environment variables required. (A template for this file can be created by running $ tkg get management-cluster.) Once set up, run the command to create a management cluster.

$ tkg init --infrastructure aws --name aws-mgmt-cluster --plan dev

Testing

Conclusion

Friday, May 18, 2018

Cloud at Customer - DNS

Introduction

In recent weeks I have been involved with a few engagements where there has been some confusion about how DNS works within a Cloud at Customer environment. This blog posting is an attempt to clarify how it works at a reasonably high level.

DNS Overview

The Domain Name System is provided to enable a mapping from a fairly human readable form to an actual IP address which computers can use to route traffic. There are essentially two components to the name: firstly the hostname of a machine and secondly a domain name, e.g. donald.mycompany.com where donald represents the hostname and mycompany.com represents what is called the domain.

On a Linux system the resolution of DNS is typically configured in the file /etc/resolv.conf, which will look something like this.

# Generated by NetworkManager

nameserver 194.168.4.100

nameserver 194.168.8.100

The nameserver entry specifies the IP address of a DNS server which will be used to translate names into IP addresses. We have two entries so that, should the first one be unavailable for any reason, the second one will be used. We can then use a Unix utility called nslookup (part of the bind-utils package if you want to install it) to query the DNS server. Other utilities such as dig can also provide information from DNS.

$ nslookup oracle.com

Server: 194.168.4.100

Address: 194.168.4.100#53

Non-authoritative answer:

Name: oracle.com

Address: 137.254.120.50

Note that this response includes the text "Non-authoritative answer". The reason for this is that each DNS server is in control of different domains, and in our example we tried to look up a domain called oracle.com. From my laptop the DNS server is my ISP's, so it knows nothing about the oracle.com domain. In this situation the DNS server I talked to will forward the request on to a .com domain server, which in turn is likely to delegate the request to the DNS server responsible (or authoritative) for the oracle.com domain. This returns the IP address, which flows back through the DNS servers to my ISP, which caches the answer and gives me the IP address to use for a lookup on oracle.com.
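The two basics covered so far, the nameserver entries in resolv.conf and the split of a name into hostname and domain, can be sketched in a few lines of Python. This is only an illustration of the file format and the naming convention; the function names are my own and it makes no network calls.

```python
def parse_nameservers(resolv_conf_text: str) -> list[str]:
    """Return the nameserver addresses in the order the resolver tries them."""
    servers = []
    for line in resolv_conf_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments (resolv.conf allows both # and ;).
        if not line or line[0] in "#;":
            continue
        fields = line.split()
        if fields[0] == "nameserver" and len(fields) >= 2:
            servers.append(fields[1])
    return servers


def split_fqdn(name: str) -> tuple[str, str]:
    """Split a fully qualified name into (hostname, domain)."""
    host, _, domain = name.rstrip(".").partition(".")
    return host, domain


SAMPLE = """# Generated by NetworkManager
nameserver 194.168.4.100
nameserver 194.168.8.100
"""

print(parse_nameservers(SAMPLE))           # ['194.168.4.100', '194.168.8.100']
print(split_fqdn("donald.mycompany.com"))  # ('donald', 'mycompany.com')
```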

DNS on Oracle Cloud@Customer

Inside the control plane of an OCC environment there runs a DNS server. This server is authoritative over two domains of interest to a customer: one in the format <Subscription ID>.oraclecloudatcustomer.com and one in the format <Account>.<Region>.internal. For example, S12345678.oraclecloudatcustomer.com and compute-500011223.gb1.internal.

cloudatcustomer.com domain

cloudatcustomer.com is a domain that Oracle own and run on behalf of the customer. It is used to provide the URLs that the customer will interact with, whether using the UI for managing the rack or accessing the REST APIs for compute, PaaS, storage etc. So, for example, the underlying compute REST API of a C@C environment will be something like https://compute.<Region>.ocm.<Subscription ID>.oraclecloudatcustomer.com. In our example above this would become https://compute.gb1.ocm.S12345678.oraclecloudatcustomer.com.

Clearly the domain <Subscription ID>.oraclecloudatcustomer.com is not going to be known in any customer's DNS, so normal DNS actions would take place and the customer's DNS would look up the domain chain to find a DNS server that is authoritative for oraclecloudatcustomer.com. As the C@C DNS servers are not publicly accessible this lookup would fail. To fix this we need to make the customer's DNS aware of the <Subscription ID>.oraclecloudatcustomer.com domain so it knows how to reach a DNS service that will return an appropriate IP address and enable usage of the URL. This is done by adding a forwarding rule to the customer's DNS service which forwards any request for a C@C URL to the DNS running in the C@C environment.

Once the forwarding rule is in place then the customer is able to start using the UI and REST APIs of the C@C so they can manage the platform.
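The effect of a forwarding rule can be pictured as a suffix match on the name being looked up. The sketch below is a toy model of that decision, not the configuration syntax of any particular DNS product; the function name and the 192.0.2.53 address standing in for the C@C DNS server are assumptions made purely for illustration.

```python
def choose_resolver(query_name: str, forward_rules: dict[str, str],
                    default_resolver: str) -> str:
    """Pick the DNS server for a query: the longest matching zone wins."""
    name = query_name.rstrip(".").lower()
    best_zone, best_target = "", default_resolver
    for zone, target in forward_rules.items():
        zone = zone.rstrip(".").lower()
        matches = name == zone or name.endswith("." + zone)
        if matches and len(zone) > len(best_zone):
            best_zone, best_target = zone, target
    return best_target


# Hypothetical rule: send the subscription's domain to the C@C DNS server.
RULES = {"S12345678.oraclecloudatcustomer.com": "192.0.2.53"}
DEFAULT = "194.168.4.100"

print(choose_resolver("compute.gb1.ocm.S12345678.oraclecloudatcustomer.com",
                      RULES, DEFAULT))  # 192.0.2.53
print(choose_resolver("oracle.com", RULES, DEFAULT))  # 194.168.4.100
```

Anything under the subscription's domain goes to the rack, everything else carries on to the normal upstream servers.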

internal domain

So now the customer can access the Cloud at Customer management screens and can create virtual machines to run their enterprise. The next question is what the internal domain is. This essentially relates to the configuration of the VM itself. During the provisioning process there is a screen which requests the "DNS Hostname Prefix" as shown below.

The name used in the DNS Hostname Prefix becomes the host portion of the registration in the OCC DNS server, i.e. when we create the VM it will have an internal DNS registration like df-web01.compute-500019369.gb1.internal which maps onto the private IP address allocated to the VM. This means that if you are creating multiple VMs together, or using a PaaS service that produces multiple VMs (like JCS), then there are DNS names available to enable them to talk to each other without you having to know the IP addresses.

Once a VM has been created it is possible to see the internal DNS name for the VM from the details page as shown below.

This can be resolved from within the VM as shown below.

[opc@df-vm01 ~]$ nslookup df-vm01

Server: 100.66.15.177

Address: 100.66.15.177#53

Name: df-vm01.compute-500019369.gb1.internal

Address: 100.66.15.178

[opc@df-vm01 ~]$ cat /etc/resolv.conf

; generated by /usr/sbin/dhclient-script

search compute-500019369.gb1.internal. compute-500019369.gb1.internal.

nameserver 100.66.15.17

So we can see that the default behaviour of a VM created from one of the standard templates is to use the internal DNS server. The DNS name of the VM is available through a DNS lookup, which returns the internal (private) IP address of the VM.
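The reason the short name df-vm01 resolves at all is the search line in resolv.conf. As a rough sketch, the resolver builds a list of candidate names to try; the function below is a simplified model of that behaviour, assuming the default ndots value of 1, and is not the resolver's actual code.

```python
def candidate_names(name: str, search_domains: list[str],
                    ndots: int = 1) -> list[str]:
    """List the names a stub resolver would try, in order (simplified)."""
    if name.endswith("."):
        # A trailing dot marks the name as absolute: no search list is applied.
        return [name.rstrip(".")]
    # Drop duplicate search domains while keeping their order.
    domains = list(dict.fromkeys(d.rstrip(".") for d in search_domains))
    searched = [f"{name}.{domain}" for domain in domains]
    if name.count(".") >= ndots:
        return [name] + searched
    return searched + [name]


SEARCH = ["compute-500019369.gb1.internal.", "compute-500019369.gb1.internal."]

print(candidate_names("df-vm01", SEARCH))
# ['df-vm01.compute-500019369.gb1.internal', 'df-vm01']
print(candidate_names("oracle.com", SEARCH))
# ['oracle.com', 'oracle.com.compute-500019369.gb1.internal']
```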

So what about lookups on other domains? Provided the customer DNS allows lookups, the request will get forwarded on to the customer DNS, which can provide the appropriate response.

[opc@df-vm01 ~]$ nslookup oracle.com

Server: 100.66.15.177

Address: 100.66.15.177#53

Non-authoritative answer:

Name: oracle.com

Address: 137.254.120.50

The next obvious question is how a client sitting in the customer's datacentre would find the IP address of the VM that was created. There are no DNS records created for the "public" IP address which has been allocated (NAT) to the VM. The answer is that it is the same as if you were installing a new server in your environment. One of the steps would be to specify an IP address for the new machine and then register it in the customer's DNS. Once you have created the VM and know which IP address is being used for it, take that IP and add it to your DNS with an appropriate fully qualified domain name, e.g. donald.mycompany.com. Once registered, any client would be able to look up donald.mycompany.com and start using the services running on the VM instance.

With Oracle Cloud at Customer it is possible to use an IP Reservation which will reserve an IP address from the client IP pool/shared network and once reserved it is fully under the control of the cloud administrator. Thus the IP address can be registered in DNS before the VM is created if that suits the processes of your company better than registering the address after VM creation.

Tuesday, May 1, 2018

OCC/OCI-C Orchestration V2 Server Anti-Affinity

Introduction

The Oracle Cloud at Customer offering provides a powerful deployment platform for many different application types. In the enterprise computing arena it is necessary to deploy clustered applications such that the app can be highly available by providing service even if there is a fault in the platform. To this end we have to ensure that an application can be distributed over multiple virtual machines and these virtual machines have to reside on different physical hardware. The Oracle Cloud Infrastructure - Classic (which is what runs on the Oracle Cloud at Customer racks) can achieve this by having it specified in what is called an orchestration.

Orchestration Overview

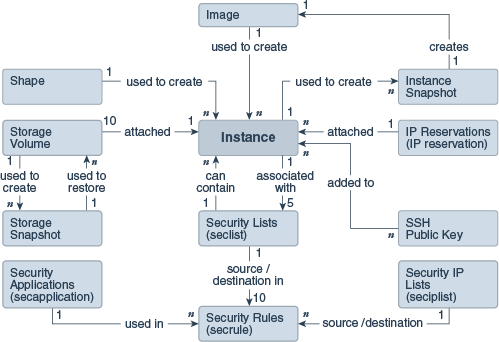

In OCI-C it is possible to create complex environments using multiple networks and apply firewall rules to the VMs that connect to these networks. The VMs themselves have many different configuration items to specify the behaviour. All of this is described in the documentation but the diagram below shows the interactions between various objects and a virtual machine instance.

| OCI-C Object interactions (Shared Network only) |

Using orchestrations (v2) you can define each of these objects in a single flat file (JSON formatted). This file allows you to specify the attributes of each object and lets objects reference each other. From these references the system is able to work out the dependencies, i.e. the order in which the objects should be created. Say a VM will use a storage volume: it can use a reference to the volume, meaning that the storage volume must come on-line before the instance is created.
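To illustrate how references imply a creation order, here is a small sketch that scans each object's template for {{label:attribute}} references and sorts the objects so that anything referenced is created first. This is only my model of the idea, not how OCI-C implements it; the helper names are hypothetical and the objects are trimmed down to the fields that matter.

```python
import re
from graphlib import TopologicalSorter

# Matches references of the form {{label:attribute}} and captures the label.
REFERENCE = re.compile(r"\{\{\s*([^:{}\s]+)\s*:[^{}]*\}\}")


def find_references(value) -> set[str]:
    """Recursively collect the labels referenced anywhere inside a value."""
    if isinstance(value, str):
        return set(REFERENCE.findall(value))
    if isinstance(value, dict):
        return set().union(*(find_references(v) for v in value.values()))
    if isinstance(value, list):
        return set().union(*(find_references(v) for v in value))
    return set()


def creation_order(objects: list[dict]) -> list[str]:
    """Order object labels so that referenced objects come first."""
    graph = {o["label"]: find_references(o.get("template", {})) for o in objects}
    return list(TopologicalSorter(graph).static_order())


OBJECTS = [
    {"label": "df-simplevm01", "type": "Instance",
     "template": {"storage_attachments": [
         {"index": 1, "volume": "{{df-simplevm01-bootdisk:name}}"}]}},
    {"label": "df-simplevm01-bootdisk", "type": "StorageVolume",
     "template": {"size": "18G"}},
]

print(creation_order(OBJECTS))  # ['df-simplevm01-bootdisk', 'df-simplevm01']
```

Even though the instance is listed first in the file, the volume it references is created before it.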

For example, the following snippet of a JSON file defines a boot disk and then the VM instance definition references the name of the volume, identified by the label name.

{

"label": "df-simplevm01-bootdisk",

"type": "StorageVolume",

"persistent":true,

"template":

{

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm01-bootvol",

"size": "18G",

"bootable": true,

"properties": ["/oracle/public/storage/default"],

"description": "Boot volume for the simple test VM",

"imagelist": "/oracle/public/OL_7.2_UEKR4_x86_64"

}

},

...

{

"label": "df-simplevm01",

"type": "Instance",

"persistent":false,

"name": "/Compute-500019369/don.forbes@oracle.com/df-simple_vms/df-simplevm01",

"template": {

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm01",

"shape": "oc3",

"label": "simplevm01",

"storage_attachments": [

{

"index": 1,

"volume": "{{df-simplevm01-bootdisk:name}}"

}

],

...

There are a few other things in this that are worth pointing out. Firstly, the naming convention for all objects is essentially /<OCI-Classic account identifier>/<User Name>/<Object name>. Thus when defining the JSON file content the first part of the name will vary according to which data centre/Cloud at Customer environment you are deploying into, and the rest is determined by the user's setup and the naming convention you want to utilise. When deploying between cloud regions/Cloud at Customer environments these values may change.

The other thing to notice is that I have specifically made the boot disk have a persistence property of true while the VM has a persistence property of false. The reason for this becomes clear when we consider the lifecycle of objects managed by a V2 orchestration.

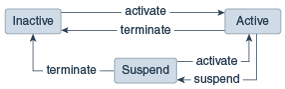


| Orchestration V2 lifecycle |

An orchestration can be either suspended or terminated once in the active state. Suspension means that any non-persistent object is deleted while persistent objects remain on-line. Termination will delete all objects defined in the orchestration. By making the storage volume persistent we can suspend the orchestration, which will stop the VM. With the VM stopped we can change many of its characteristics and then activate the orchestration again. This re-creates the VM using the persistent storage volume, so the VM retains any data it has written to disk and acts as if it had simply been re-booted, but now happens to have more cores/memory etc.
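The difference between the two actions can be captured in a few lines. This is a sketch of the rule as described above, not of the service itself; the function name is my own and treating a missing persistent flag as false is an assumption.

```python
def surviving_objects(objects: list[dict], action: str) -> list[str]:
    """Return the labels of objects still on-line after the given action."""
    if action == "suspend":
        # Only persistent objects survive a suspend.
        # Assumption: an object with no "persistent" key is non-persistent.
        return [o["label"] for o in objects if o.get("persistent", False)]
    if action == "terminate":
        # Terminate deletes everything defined in the orchestration.
        return []
    raise ValueError(f"unknown action: {action}")


OBJECTS = [
    {"label": "df-simplevm01-bootdisk", "type": "StorageVolume", "persistent": True},
    {"label": "df-simplevm01", "type": "Instance", "persistent": False},
]

print(surviving_objects(OBJECTS, "suspend"))    # ['df-simplevm01-bootdisk']
print(surviving_objects(OBJECTS, "terminate"))  # []
```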

Below is a screenshot taken from OCI-Classic (18.2.2) showing some of the VM attributes that can be changed. Essentially all configuration items of the VM can be adjusted.

| Basic VM attributes that can be changed in an Orchestration V2 |

These attributes cannot be changed on a running VM - it mandates a shutdown. If the VM had been marked to be persistent then suspending the orchestration would have left the VM on-line and thus with immutable configuration so any change would mandate a termination of the orchestration which would delete things like the storage volumes. Potentially not the desired behaviour.

Using Server Anti-Affinity

Having considered the usage of orchestrations we can now consider setting up some degree of control over the placement of a VM. This is covered in the documentation but here we are considering two options, firstly the instruction to place VMs on "different nodes" and secondly to place them on the "same node". Obviously the primary purpose of this blog posting is to consider the different node approach.

different_node Relationships

One of the general attributes of an object in an orchestration is its relationship to other objects. The documentation for V2 specifies that the only relationship is the "depends" relationship, which is included in an orchestration using the following format:

"relationships": [

{

"type": "depends",

"targets": ["instance1"]

}

]

Other relationships are possible, namely different_node and same_node. For a different_node approach we simply set the type to "different_node" and then list, in the instances array, the instances that must be on different nodes. Doing this will also set up a depends relationship, as the placement of this instance will depend on the placement of the other instances. So, for example, with 4 VMs the objects in the orchestration will have the following relationships to ensure they are placed on different physical nodes.

...

{

"label": "df-simplevm01",

"type": "Instance",

"persistent":false,

"name": "/Compute-500019369/don.forbes@oracle.com/df-simple_vms/df-simplevm01",

"template": {

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm01",

"shape": "oc3",

"label": "simplevm01",

"relationships":[

{

"type":"different_node",

"instances":[ "instance:{{df-simplevm02:name}}",

"instance:{{df-simplevm03:name}}",

"instance:{{df-simplevm04:name}}"

]

}

],

...

{

"label": "df-simplevm02",

"name": "/Compute-500019369/don.forbes@oracle.com/df-simple_vms/df-simplevm02",

"template": {

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm02",

"shape": "oc3",

"label": "simplevm02",

"relationships":[

{

"type":"different_node",

"instances":[ "instance:{{df-simplevm03:name}}",

"instance:{{df-simplevm04:name}}"

]

}

],

...

{

"label": "df-simplevm03",

"type": "Instance",

"persistent":false,

"name": "/Compute-500019369/don.forbes@oracle.com/df-simple_vms/df-simplevm03",

"template": {

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm03",

"shape": "oc3",

"label": "simplevm03",

"relationships":[

{

"type":"different_node",

"instances":[ "instance:{{df-simplevm04:name}}"

]

}

],

...

{

"label": "df-simplevm04",

"type": "Instance",

"persistent":false,

"name": "/Compute-500019369/don.forbes@oracle.com/df-simple_vms/df-simplevm04",

"template": {

"name": "/Compute-500019369/don.forbes@oracle.com/df-simplevm04",

"shape": "oc3",

"label": "simplevm04",

...
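The pattern in the snippet above is that each VM lists only the VMs defined after it, so every pair is covered exactly once and the last VM needs no relationship at all. For larger clusters it may be easier to generate these blocks than to type them; the following is a minimal sketch of that, with a function name of my own choosing.

```python
import json


def different_node_relationships(labels: list[str]) -> dict[str, list[dict]]:
    """Build the relationships block for each VM label, in definition order."""
    result = {}
    for position, label in enumerate(labels):
        later = labels[position + 1:]
        if later:
            result[label] = [{
                "type": "different_node",
                # Reference each later VM by its orchestration label.
                "instances": ["instance:{{" + other + ":name}}" for other in later],
            }]
        else:
            # The last VM is already constrained by all the earlier ones.
            result[label] = []
    return result


LABELS = ["df-simplevm01", "df-simplevm02", "df-simplevm03", "df-simplevm04"]
relationships = different_node_relationships(LABELS)
print(json.dumps(relationships["df-simplevm03"], indent=2))
```

The output for each label can be pasted into the "relationships" attribute of the matching instance template.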

In the public cloud there is notionally an infinite compute resource so a great many VMs can get placed onto different nodes. In the Cloud at Customer model there are only so many "model 40" compute units that are subscribed to, which puts a physical limit on the number of VMs that can be placed on different nodes. In the example above there are 4 VMs and in a typical OCC starter rack there are only 3 nodes, so the obvious question is what the behaviour is in this scenario. The answer is that the orchestration will enter a "transient_error" state as the fourth VM cannot be started on the rack, and the orchestration will try to start up the VM on a regular basis. The error is reported as: